Visualizing Adversarial Attacks

Pixelwise classifiers with Large-margin Gaussian Mixture representation

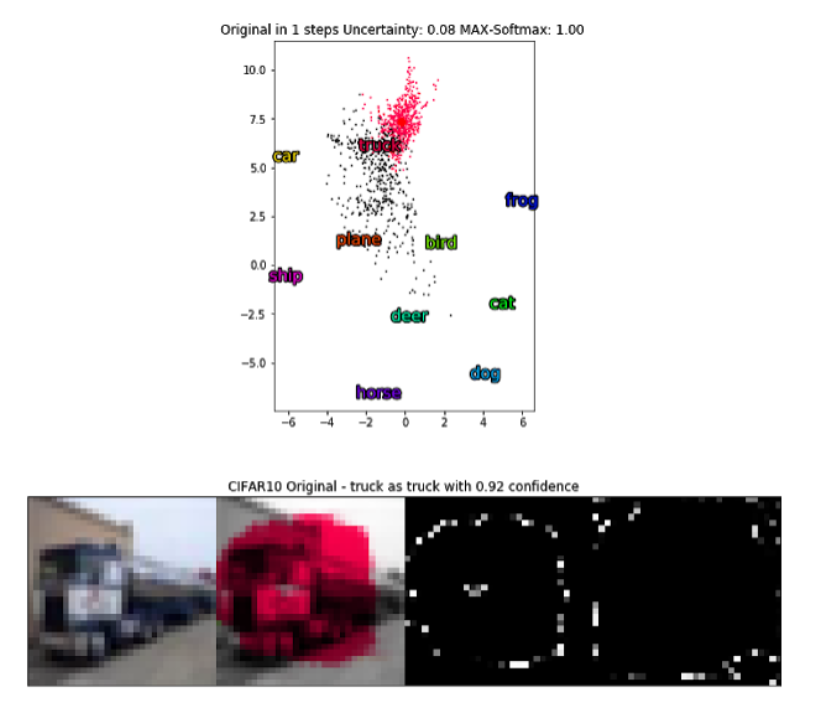

These are several visualizations I dug up from some really messy code, which I won't share just yet. This was quick-and-dirty work to test the feasibility of an idea: I wanted to visualize what happens in the representation space at each pixel during an FGSM (fast gradient sign method) attack. I trained a weak segmentation model whose representation space was modified for L-GM (Large-margin Gaussian Mixture). I found L-GM useful because it provides a sensible way to directly learn a representation that can be visualized without solving an additional optimization problem (looking at you, t-SNE!). You can also project new points into it, which was central to my purpose of observing the effects of an adversarial attack.
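To make the idea concrete, here is a minimal NumPy sketch of an FGSM step against a toy pixelwise classifier. It is not the code behind these figures. Everything in it is an assumption for illustration: the linear projection `proj` stands in for the segmentation network, the class centers `centers` and the distance-based logits are a simplified L-GM-style head (identity covariance, no margin term), and `eps` is an arbitrary step size. The point is only to show how clean and attacked pixels can be projected into the same 2-D space and compared.

```python
import numpy as np

def softmax_probs(z, mu):
    """Class probabilities from 2-D embeddings z (H, W, 2) and class centers mu (K, 2).

    Assumed toy head: logit_k = -0.5 * ||z - mu_k||^2.
    """
    diff = z[..., None, :] - mu                       # (H, W, K, 2)
    logits = -0.5 * np.sum(diff ** 2, axis=-1)        # (H, W, K)
    logits = logits - logits.max(axis=-1, keepdims=True)
    p = np.exp(logits)
    return p / p.sum(axis=-1, keepdims=True)

def pixel_loss(x, y, proj, mu):
    """Per-pixel cross-entropy of the toy model, shape (H, W)."""
    p = softmax_probs(x @ proj.T, mu)
    p_true = np.take_along_axis(p, y[..., None], axis=-1)[..., 0]
    return -np.log(p_true + 1e-12)

def fgsm(x, y, proj, mu, eps):
    """One FGSM step: x_adv = clip(x + eps * sign(dLoss/dx), 0, 1)."""
    p = softmax_probs(x @ proj.T, mu)
    # For this head, dLoss/dz = sum_k p_k * mu_k - mu_y (the ||z||^2 term cancels).
    grad_z = p @ mu - mu[y]                           # (H, W, 2)
    grad_x = grad_z @ proj                            # chain rule through z = proj @ x
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

# Hypothetical data: an 8x8 RGB "image", random labels, random projection.
rng = np.random.default_rng(0)
image = rng.uniform(0.2, 0.8, size=(8, 8, 3))
labels = rng.integers(0, 3, size=(8, 8))
proj = rng.normal(size=(2, 3))
centers = np.array([[0.0, 2.0], [-2.0, -1.0], [2.0, -1.0]])

adv = fgsm(image, labels, proj, centers, eps=0.05)

# Project clean and attacked pixels into the same 2-D space for plotting.
z_clean = image @ proj.T
z_adv = adv @ proj.T
print("mean loss before:", pixel_loss(image, labels, proj, centers).mean())
print("mean loss after: ", pixel_loss(adv, labels, proj, centers).mean())
```

Scattering `z_clean` and `z_adv` against the class centers gives the kind of per-pixel before/after picture described above, with no extra fitting step needed for the new points.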

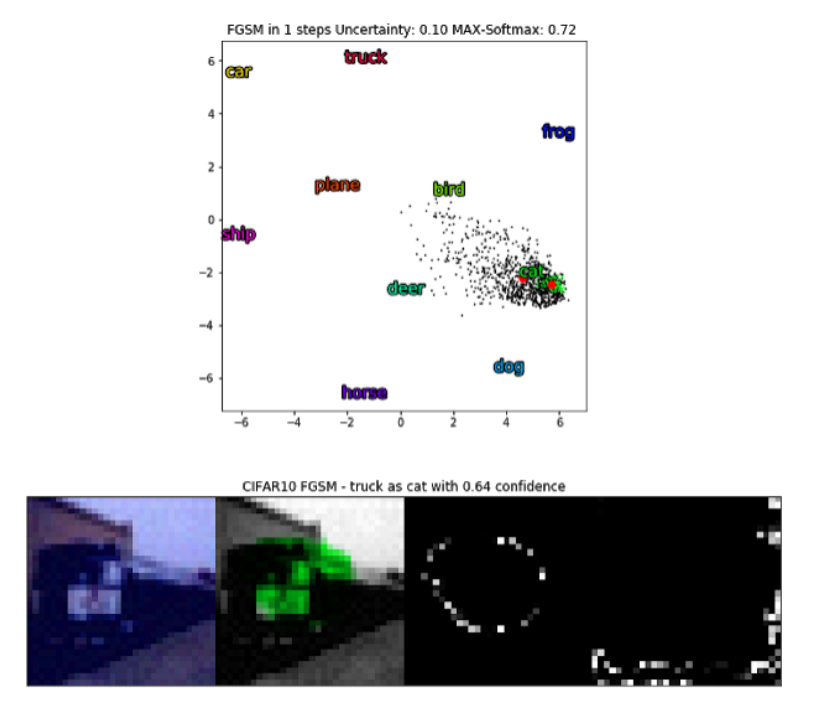

The max-softmax, which is a good baseline for confidence in detection tasks, drops from 1.00 to 0.72 after adversarial modification. I also computed a confidence term that looks for regions of "uncertainty", a word I am using loosely. Specifically, I was interested in regions where the sigmoid output is close to 0.5, both on the raw logits and on the logits after temperature scaling. I saw that when the model is behaving well, the uncertain regions expand uniformly in space, resulting in little overlap between the raw and temperature-scaled regions. So by multiplying the two maps and taking the spatial average, I can determine the overall uncertainty, and by subtracting that from 1 I get a confidence measure for spotting adversarial images spatially.
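A rough sketch of these two scores, under stated assumptions, might look like the following. The post does not say how "close to 0.5" is scored, so `closeness_to_half` (1 at a sigmoid output of 0.5, falling linearly to 0 at 0 or 1) is my own guess, as are the default `temperature=2.0` and the reading of the final step as one minus the mean overlap.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def max_softmax(logits):
    """Max softmax probability per pixel; logits has shape (H, W, K)."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    p = np.exp(shifted)
    p = p / p.sum(axis=-1, keepdims=True)
    return p.max(axis=-1)

def closeness_to_half(p):
    """Assumed uncertainty score: 1 when p == 0.5, 0 when p is 0 or 1."""
    return 1.0 - np.abs(2.0 * p - 1.0)

def overlap_confidence(logits, temperature=2.0):
    """Confidence = 1 - spatial mean of (raw uncertainty * tempered uncertainty).

    logits: per-pixel sigmoid logits of shape (H, W).
    temperature: hypothetical value, not taken from the post.
    """
    u_raw = closeness_to_half(sigmoid(logits))
    u_temp = closeness_to_half(sigmoid(logits / temperature))
    uncertainty = np.mean(u_raw * u_temp)   # overlap of the two uncertain regions
    return 1.0 - uncertainty

# Hypothetical logit maps: one decisive, one hovering near the decision boundary.
decisive = np.full((4, 4), 50.0)
hesitant = np.zeros((4, 4))
print(overlap_confidence(decisive), overlap_confidence(hesitant))
```

With this scoring, a map of decisive logits gives a confidence near 1, and a map sitting on the decision boundary gives a confidence near 0, which matches the direction of the numbers reported here.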

In this case, it appears that FGSM just messed with the image's high-contrast regions on the cab of the truck. My confidence measure drops from 0.92 to 0.64, but the detection still clears the 0 threshold learned for the sigmoid.

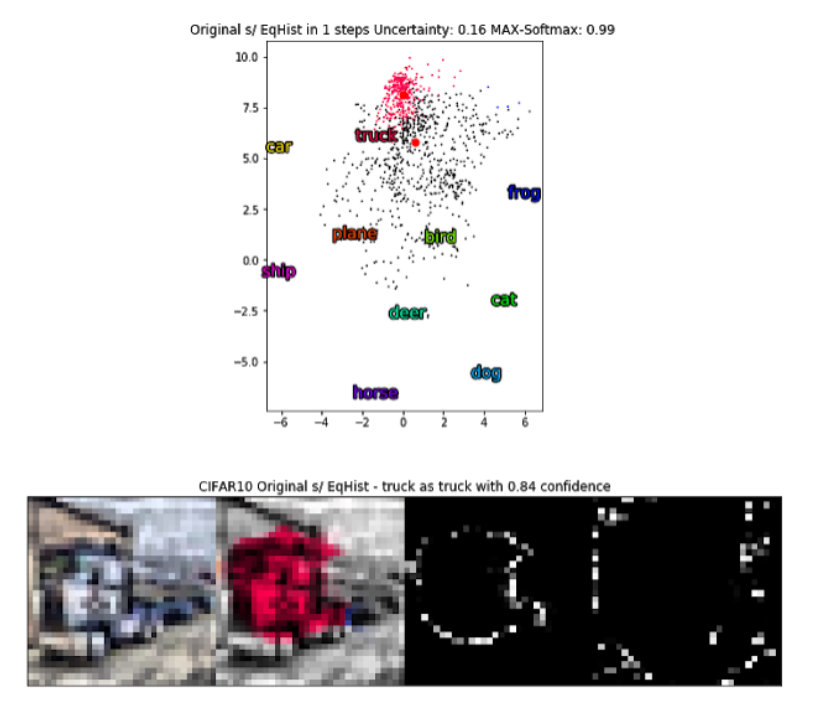

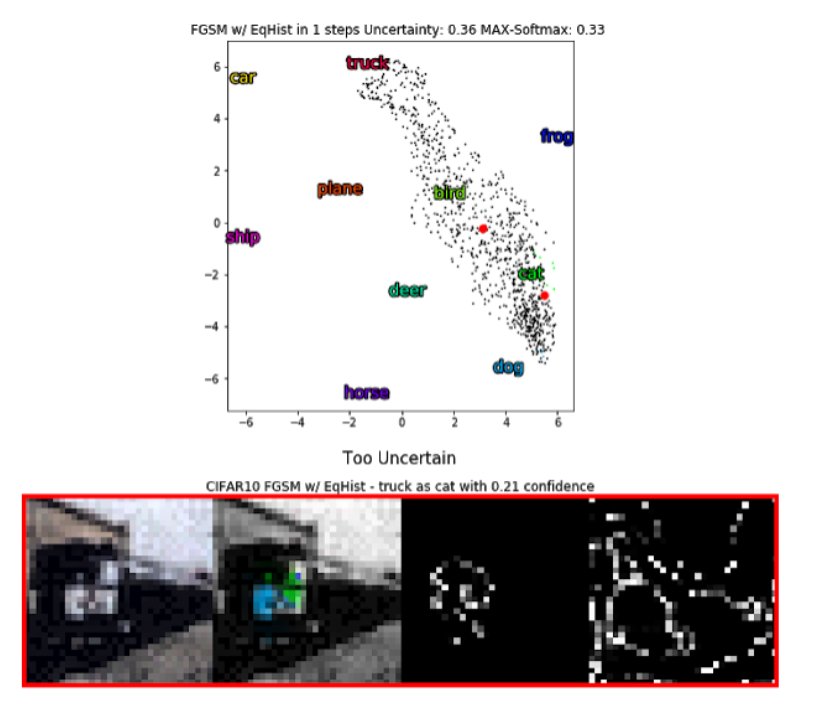

When CLAHE (contrast-limited adaptive histogram equalization) is applied to both images, we can see some interesting effects.

On the original image, my confidence measure drops from 0.92 to 0.84 and the pixel embedding spreads out far more.

On the FGSM image, my confidence measure plummets from 0.64 to 0.21. Interestingly, the pixel embedding stretches back toward the truck class.